Nvidia earnings will show just how much Big Tech is spending on AI. Here’s what companies from Microsoft to OpenAI have said so far.

Elon Musk (top left), Jensen Huang (top center), Sam Altman (top right), Mark Zuckerberg (bottom left), Sundar Pichai (bottom right).

Tech giants Amazon, Google, Meta, and Microsoft are on track to spend a staggering $185 billion on AI in 2024, according to a JPMorgan analysis — and Nvidia is powering the revolution.

Most of the spending from these companies is going towards hardware and software that enables computers to process large amounts of data. The majority of those computing resources are Nvidia graphics processing units, which are estimated to go for $30,000 to $40,000 per chip.

As anticipation ramps up for Nvidia’s second-quarter earnings release on Wednesday, the chipmaker’s results will help investors determine whether AI investments are paying off and whether the frenzy is set to die down anytime soon.

Recent doubt from investors has followed major investments in the industry as the AI race has heated up. Big Tech companies are both spending big on their own technology and strategically investing in external AI ventures.

Microsoft has invested $13 billion in OpenAI, for example. Amazon invested $4 billion in its partnership with Anthropic, whose chatbot Claude rivals ChatGPT. Google, which also competes with OpenAI, invested $2 billion in Anthropic.

Meantime, OpenAI itself has invested in its fair share of startups, including in humanoid robot companies FigureAI and 1X Technologies. OpenAI CEO Sam Altman has also put money into several AI health startups, like Thrive AI Health.

And OpenAI has a notable partnership with Apple to bring ChatGPT to some Apple products, though no financial terms have been disclosed about the deal.

It’s no secret that companies are spending on AI. But while Big Tech companies have publicized their investments in other companies, they’ve remained opaque about what they’re spending to scale their own AI offerings, from buying chips to hiring experts to building data centers.

Here’s a list of the Big Tech companies and what we know about their AI investments.

Google

Google has positioned itself as an AI-first company. Pictured: Alphabet CEO Sundar Pichai.

Google has positioned itself as an AI-first company and released a plethora of offerings over the last few months, although it has had to roll back some because of inaccuracies.

But executives remained fairly cryptic about its AI spending in the company’s second-quarter earnings call last week.

Executives haven’t provided any hard numbers on the company’s AI investments but continued to emphasize that its AI ambitions will produce long-term returns. For now, what we do know is Google spent $3 billion to build and expand its data centers, and $60 million to train AI on Reddit posts.

OpenAI

OpenAI CEO Sam Altman.

OpenAI’s operating costs may be as high as $8.5 billion this year, according to The Information. That might be more than the startup can afford, raising concerns about the company’s profitability — especially as its competitors offer free versions of their chatbots.

The costs include about $4 billion to rent server capacity from Microsoft, $3 billion to train the AI models with new data, and $1.5 billion on labor costs, the report said.
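As a quick arithmetic check, the sketch below adds up the reported components and compares them with the $8.5 billion headline figure. It assumes the labor component is $1.5 billion, the value that makes the parts match the reported total. The variable names are illustrative only.

```python
# Reported OpenAI cost components, in billions of US dollars.
# Assumption: labor is 1.5 (billion), so the parts add up to the reported total.
costs_billion = {
    "server rental from Microsoft": 4.0,
    "model training": 3.0,
    "labor": 1.5,
}

REPORTED_TOTAL_BILLION = 8.5  # upper estimate attributed to The Information

total = sum(costs_billion.values())

# Show each component and its share of the total.
for item, cost in costs_billion.items():
    print(f"{item}: ${cost:.1f}B ({cost / total:.0%} of total)")

print(f"sum of components: ${total:.1f}B")
print("matches reported total:", total == REPORTED_TOTAL_BILLION)
```

On these figures, server rental is the largest single item, at a little under half of the total.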

Meta

Mark Zuckerberg at the UFC 300 event in Las Vegas in April.

Meta’s CEO, Mark Zuckerberg, said he plans to purchase 350,000 Nvidia GPUs by the end of 2024, bringing Meta’s GPU collection to roughly 600,000. Analysts have estimated the giant could have spent about $18 billion by the end of 2024.

In its second-quarter earnings report on July 31, the company said it expects capex in the range of $37 billion to $40 billion for the year, narrowed upward from a prior range of $35 billion to $40 billion. It also said that “we currently expect significant capital expenditures growth in 2025 as we invest to support our artificial intelligence research and product development efforts.”

A JPMorgan analyst said the company’s costs, fueled by AI spending, could hit $50 billion by 2025, according to a report from Quartz.

Zuckerberg said the big focus is “figuring out the right amount” of infrastructure for the AI future. Meta is planning the computing clusters and data centers for the next several years, he said, but it’s “hard to predict” how that’ll pan out.

Apple

Apple’s CFO said the company spent about $100 billion over the last five years on research and development. Pictured: CEO Tim Cook.

In a first-quarter earnings call, Apple’s CFO Luca Maestri said the company spent about $100 billion over the last five years on research and development, but didn’t indicate how much of that was spent on AI, according to a report from MarketWatch.

In its third-quarter earnings call on Thursday, CEO Tim Cook reiterated the same message and said the company has been investing in AI and machine learning for years.

The company spent a little over $8 billion on research and development in the third quarter, slightly more than it spent a year earlier. The CEO said we can expect to see a “year over year” increase in AI spending.

In terms of its capital expenditures, Cook emphasized the company’s “hybrid” approach, where it combines in-house work with external partnerships — like its collaboration with OpenAI.

Microsoft

Leaked documents show Microsoft’s plans to obtain 1.8 million AI chips. Pictured: CEO Satya Nadella.

Leaked documents earlier this year revealed the company’s plans to obtain 1.8 million AI chips by the end of the year and ramp up its data center capacity.

During its fourth-quarter earnings call on Tuesday, Microsoft executives said AI-related spending made up nearly all of the $19 billion spent on capital expenditures. Roughly half was for infrastructure, and the remaining cloud and AI-related spending was mostly for CPUs and GPUs.

But Microsoft hasn’t quite seen returns on its investment yet. Azure growth came in just below analyst estimates, and shares dropped by over 6% in post-market trading.

Amazon

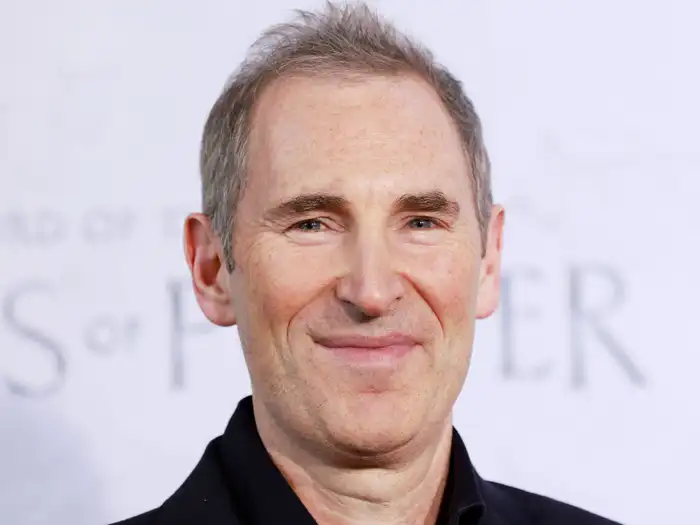

Amazon said it will invest up to $230 million in startups building generative AI applications. Pictured: CEO Andy Jassy.

Amazon is working on an AI chatbot to compete directly with OpenAI’s ChatGPT, called Metis, and has been trying to make its own AI chips to compete with Nvidia, albeit with limited success.

Meantime, it plans to spend almost $150 billion in the coming 15 years on data centers, Bloomberg reported.

Amazon is also planning to invest up to $230 million into startups building generative AI-powered applications.

About $80 million of the investment will fund Amazon’s second AWS Generative AI Accelerator program. The program will position Amazon as a go-to cloud service for startups building generative AI products.

A lot of the new investment is in the form of compute credits for AWS infrastructure, which means it can’t be transferred to other cloud service providers like Google and Microsoft.

xAI

Elon Musk.

Elon Musk said earlier this month that the latest version of xAI’s chatbot Grok would train on 100,000 H100s. Nvidia’s H100 GPUs help handle data processing for large language models, and the chips are a key component in scaling AI.

The GPUs are estimated to cost between $30,000 and $40,000 each, meaning xAI is spending between $3 billion and $4 billion on AI chips.
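The estimate is simple multiplication, sketched below as a back-of-the-envelope calculation. It assumes all 100,000 chips are bought outright at the estimated per-chip price range. The function and constant names are illustrative, not taken from any report.

```python
# Back-of-the-envelope estimate of xAI's chip outlay from the figures quoted above.
# Assumption: every chip is purchased outright at the estimated per-chip price.
GPU_COUNT = 100_000        # H100s Musk said Grok would train on
PRICE_LOW_USD = 30_000     # low end of the estimated per-chip price
PRICE_HIGH_USD = 40_000    # high end of the estimated per-chip price


def chip_spend_range(gpu_count: int, price_low: int, price_high: int) -> tuple[int, int]:
    """Return the (low, high) total spend in US dollars."""
    return gpu_count * price_low, gpu_count * price_high


low, high = chip_spend_range(GPU_COUNT, PRICE_LOW_USD, PRICE_HIGH_USD)
print(f"${low / 1e9:.1f} billion to ${high / 1e9:.1f} billion")
```

The same function can be reused for other chip counts in this piece, but the result is only as good as the per-chip price estimate.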

It’s worth noting that it’s not clear if Musk’s company purchased those chips outright. It’s possible to rent GPU compute from cloud service providers, and The Information reported in May that xAI was in discussions with Oracle about a $10 billion investment to rent cloud servers.