Chip giant Nvidia wants to bring robots to the hospital

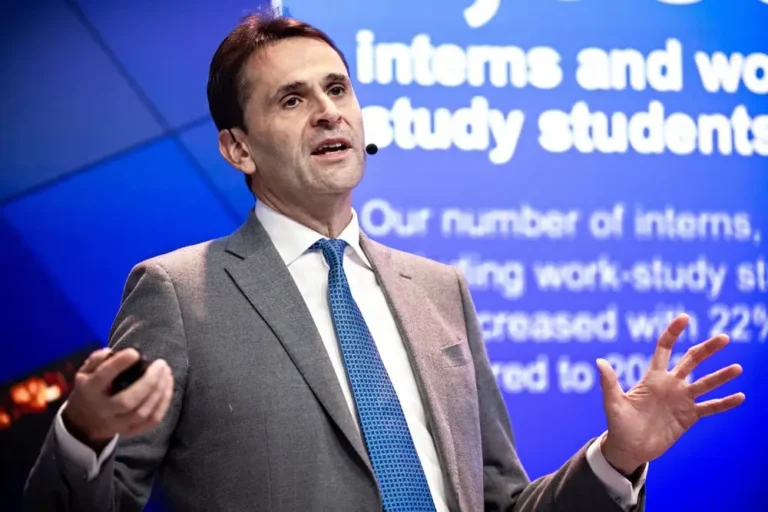

Kimberly Powell, Nvidia’s VP of healthcare, shared the tech giant’s bold vision for transforming healthcare with AI, including robots for health systems.

Nvidia is digging deeper into healthcare — and the tech giant has big ambitions to bring AI to every part of the hospital.

The AI behemoth has already made a number of investments and struck partnerships in digital health and biotech, including in virtual care and drug discovery.

After massive developments in large language models over the past two years, the next wave of AI will turn medical devices into robots, according to Kimberly Powell, Nvidia’s VP of healthcare.

“This physical AI thing is coming where your whole hospital is going to turn into an AI,” Powell told B-17. “You’re going to have eyes operating on your behalf, robots doing what is otherwise automatable work, and smart digital devices. So we’re super excited about that, and we’re doing a lot of investments.”

Physical AI refers to “models that can perceive, understand, and interact with the physical world,” Powell said during a keynote presentation at this year’s HLTH conference in Las Vegas.

In healthcare, she told B-17, there are countless applications for this next wave. Nvidia is building out its tech stack and partnering with select startups to spearhead the company’s AI push into more health systems.

The efforts are part of Nvidia’s plan to corner the emerging market for advanced robotics and keep its spot in the tech stratosphere.

It’ll take healthcare “a couple of years” to fully embrace the physical AI wave, Powell said — but with key components of physical AI already being adopted in healthcare, that transformation could be closer than we think.

Hospitals’ “digital twins”

While recent AI breakthroughs have put more sophisticated robotics within reach, the machines still present unique challenges for Nvidia and other companies developing them.

Powell said these physical AI systems would essentially require three computers working in tandem: one to train the AI, another to simulate the physical world in a digital space (a so-called “digital twin”), and a third to operate the robot.

The robots need not only to receive and respond to real-world inputs, like movement and speech, but also to be trained in physics, such as the grip and amount of pressure required to use a scalpel.

To tackle the digital twin challenge in healthcare, Nvidia is partnering with IT solutions provider Mark III, which works with health systems such as University of Florida Health to create simulations of their hospital environments and develop AI with them.

Hospitals can also use those digital twins to train clinicians, Powell said, for example by letting doctors practice a particular surgery in a virtual operating room first.

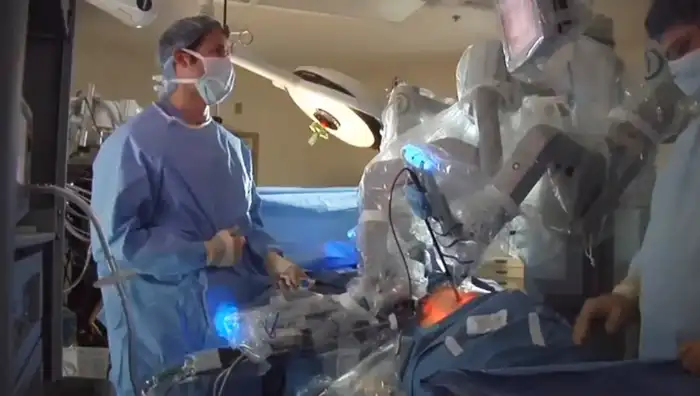

Physical AI is also getting more sophisticated for real-time surgery. Nvidia is an investor in Moon Surgical, a robotics company that uses Nvidia’s Holoscan platform for its surgical assistant robot. The robot gives surgeons an extra set of arms to hold and manipulate instruments, such as the cameras used inside the body during laparoscopic surgery.

Intuitive Surgical’s long-standing surgical robotics offering, the da Vinci Surgical System, also helps surgeons operate on soft tissue.

But Nvidia sees robots being used far beyond the operating room, including to monitor patients for falls in their hospital rooms or to bring them fresh linens, Powell said. She pointed to Mayo Clinic, which started deploying its own linen delivery robots at its Florida campus earlier this year to autonomously ferry fresh sheets from room to room.

“You could imagine someday when you get an X-ray scan, you’ll have a room that you just step into. It has cameras such that it knows where you’re in the vicinity of this scanner; it’ll tell you where to stand in front of the scanner. It’ll take an X-ray, it’ll check the quality,” Powell said.

Nvidia’s digital health ambitions

Nvidia doesn’t want to be a healthcare company and would never acquire one, Powell said. But in digital health, the AI colossus is putting its money and tech behind a number of startups.

Medical scribe company Abridge is no doubt Nvidia’s buzziest healthcare bet. Nvidia’s venture capital arm, NVentures, invested in Abridge in March, and the startup is now reportedly raising more money at a $2.5 billion pre-money valuation. Powell said Nvidia is doing “deep, deep speech research” with Abridge.

General Catalyst-backed Hippocratic AI also snagged funding from Nvidia in September, after notching a partnership with the company this spring to develop healthcare AI agents that can video chat with patients.

And Nvidia is teaming up with Microsoft to supercharge the next generation of AI startups in healthcare and life sciences. The two companies said at HLTH that they’re bringing together Nvidia’s accelerator, Nvidia Inception, and Microsoft’s free product platform for founders, Microsoft for Startups, to provide cloud credits, AI tools, and expert support to startups, starting with healthcare and life sciences companies.