Nvidia’s Blackwell era will test the world’s most valuable company in a highwire act

Nvidia’s new AI chip, Blackwell, is key to the company’s next growth phase.

Nvidia looks set to end the year as confidently as it started. How next year plays out will significantly depend on the performance of Blackwell, its next-generation AI chip.

The Santa Clara-based chip giant reminded everyone why its shares have climbed more than 200% this year, making it the world’s most valuable company. On Wednesday, it reported another set of blowout earnings, with revenue hitting $35.1 billion in its third quarter, up 94% from a year ago.

But despite the strong earnings, which Wedbush analyst Dan Ives said “should be framed and hung in the Louvre,” investors remained cautious as they focused their attention on the highwire act Nvidia must pull off with Blackwell.

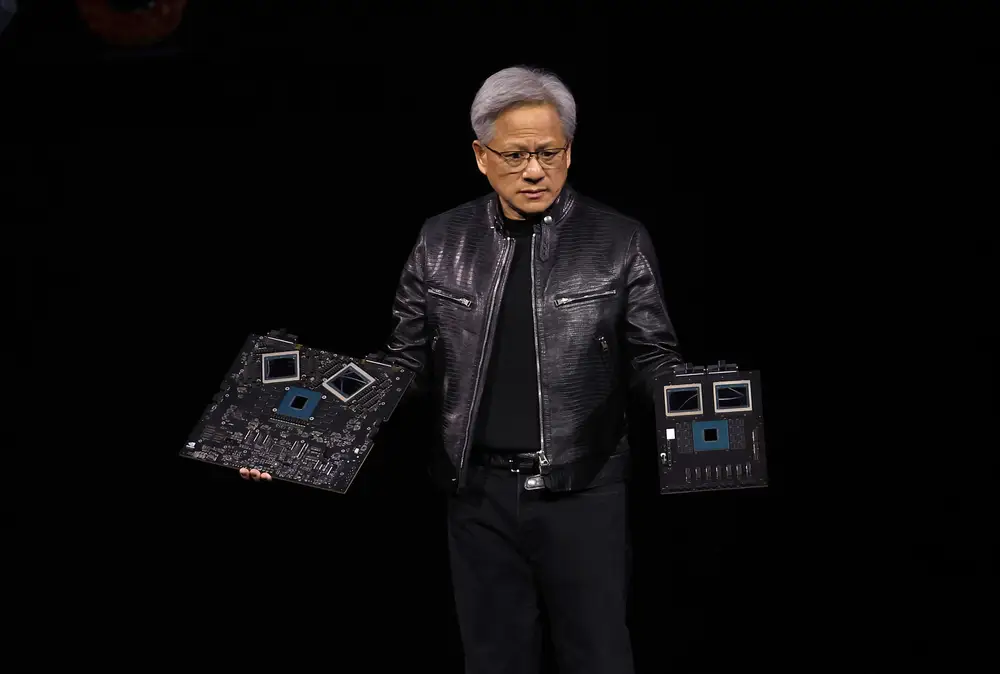

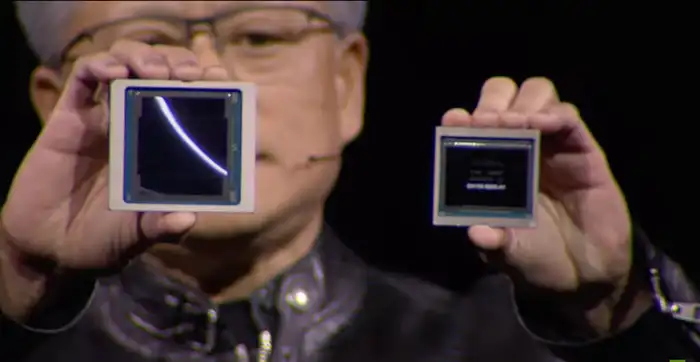

The new chip, a graphics processing unit (GPU), was first unveiled by CEO Jensen Huang at the company’s GTC conference in March as the successor to Hopper, the GPU that companies across Silicon Valley and beyond have used to build powerful AI models.

While Nvidia confirmed on Wednesday that Blackwell is now “in full production,” with 13,000 samples shipped to customers last quarter, there were signs that the company faces a difficult path as it scales up production of its next-generation GPUs.

Nvidia must navigate complex supply chains

For one, Blackwell is what Nvidia CFO Colette Kress called a “full-stack” system.

That makes it a complex piece of machinery that must be tailored to a wide range of specific needs across many different customers. As Kress told investors on Wednesday’s earnings call, Blackwell is built with “customizable configurations” to address “a diverse and growing AI market.” That includes everything from “x86 to Arm, training to inferencing GPUs, InfiniBand to Ethernet switches,” she said.

Nvidia will also need precise execution to satisfy its customers. As Kress said on the call, demand for Blackwell is “staggering,” with the company “racing to scale supply to meet the incredible demand customers are placing on us.”

To achieve this, it’ll need to focus on two areas. First, meeting demand for Blackwell will mean efficiently orchestrating an incredibly complex and widespread supply chain. In response to a question from Goldman Sachs analyst Toshiya Hari, Huang reeled off a near-endless list of suppliers contributing to Blackwell production.

Huang holds up Blackwell, left, and its predecessor, the H100, right.

The list included Asian semiconductor firms TSMC, SK Hynix, and SPIL; Taiwanese electronics giant Foxconn; Amphenol, a Connecticut-based producer of fiber optic connectors; cloud and data center specialists such as Wiwynn and Vertiv; and several others.

“I’m sure I’ve missed partners that are involved in the ramping up of Blackwell, which I really appreciate,” Huang said. He’ll need every one of them to stay in sync to help meet next quarter’s revenue guidance of $37.5 billion. Recent reports had suggested that cooling issues were plaguing Blackwell, but Huang indicated they had been addressed.

Kress acknowledged that the costs of the Blackwell ramp-up will cause gross margins to drop by a few percentage points, but she expects them to recover to their current level of roughly 75% once production is “fully ramped.”

All eyes are on Blackwell’s performance

The second area where Nvidia will need to execute with absolute precision is performance. AI companies racing to build smarter models, and to keep their own backers on board, are counting on Huang’s promise that Blackwell is far more capable than Hopper.

Reports so far suggest Blackwell is on track to deliver next-generation capabilities. Kress reassured investors on this, citing results from Blackwell’s debut last week on the MLPerf Training benchmark, an industry test that measures “how fast systems can train models to a target quality metric.” The Nvidia CFO said Blackwell delivered a “2.2 times leap in performance over Hopper” on the test.

Collectively, these performance leaps and supply-side pressures matter to Nvidia for a longer-term reason, too. Earlier this year, Huang committed the company to a “one-year rhythm” of new chip releases, a move that effectively requires it to unveil a substantially more powerful generation of GPUs every year while convincing customers that it can deliver them at scale.

While Blackwell’s performance gains appear to be real, reports this year have suggested that production problems have delayed its rollout.

Nvidia remains ahead of rivals like AMD

For now, investors appear to be taking a wait-and-see approach to Blackwell, with Nvidia’s shares down less than 1% in premarket trading. Hamish Low, research analyst at Enders Analysis, told B-17 that “the reality is that Nvidia will dominate the AI accelerator market for the foreseeable future,” particularly as “the wave of AI capex” expected from tech firms in 2025 will ensure it remains “the big winner” in the absence of strong competition.

“AMD is a ways behind and none of the hyperscaler chips are going to be matching that kind of volume, which gives Nvidia some breathing room in terms of market share,” Low said.

As Low notes, however, there’s another reality Nvidia must reckon with. “The challenge is the sheer weight of investor expectations due to the scale and premium that Nvidia has reached, where anything less than continually flying past every expectation is a disappointment,” he said.

If Blackwell misses those expectations in any way, Nvidia may need to brace for a fall.