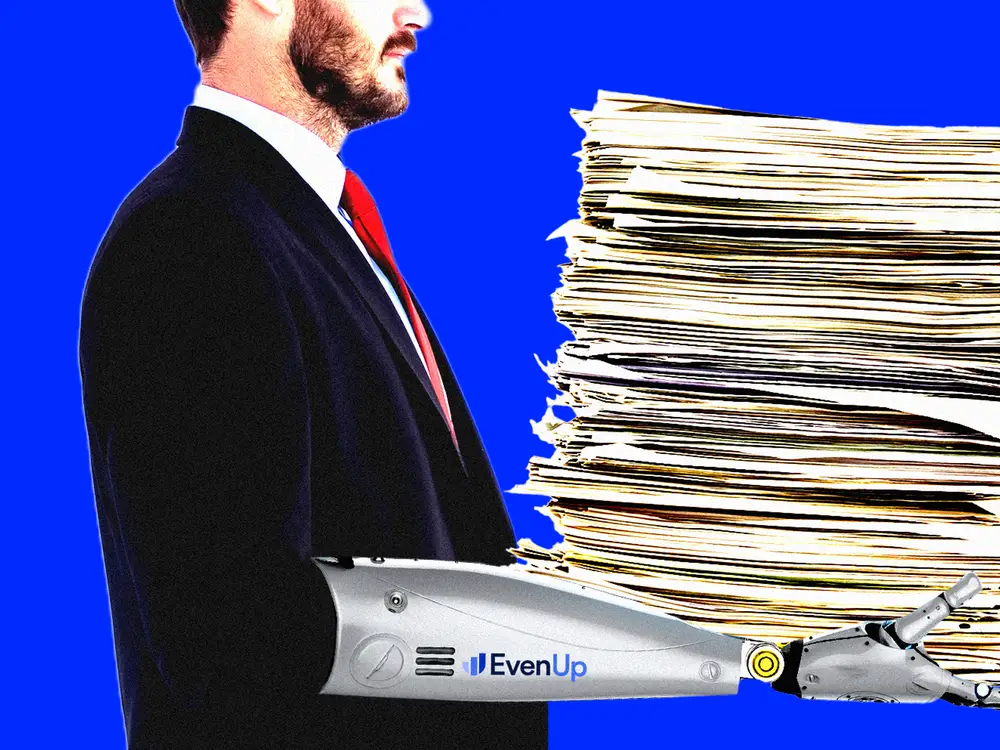

EvenUp’s valuation soared past $1 billion on the potential of its AI. The startup has relied on humans to do much of the work, former employees say.

EvenUp vaulted past a $1 billion valuation on the idea AI could help automate a lucrative part of the legal business. Former employees told B-17 that the startup has relied on humans to complete much of the work.

EvenUp aims to streamline personal-injury demands and has said it is one of the fastest-growing companies in history after jumping from an $85 million valuation at the start of the year to unicorn status in an October funding round.

Customers upload medical records and case files, and EvenUp’s AI is supposed to sift through the vast amount of data, pulling out key details to determine how much an accident victim should be owed.

One of EvenUp’s investors has described its “AI-based approach” as representing a “quantum leap forward.”

The reality, at least so far, is that human staff have done a significant share of that work, and EvenUp’s AI has been slow to pick up the slack, eight former EvenUp employees told B-17 in interviews over the late summer and early fall.

The former employees said they witnessed numerous problems with EvenUp’s AI, including missed injuries, hallucinated medical conditions, and incorrectly recorded doctor visits. The former employees asked not to be identified to preserve future job prospects.

“They claim during the interview process and training that the AI is a tool to help the work go faster and that you can get a lot more done because of the AI,” said a former EvenUp employee who left earlier this year. “In practice, once you start with the company, my experience was that my managers told me not even to use the AI. They said it was unreliable and created too many errors.”

Two other former employees also said they were told by supervisors at various points this year not to use EvenUp’s AI. Another former employee who left this year said they were never told not to use the AI, just that they had to be vigilant in correcting it.

“I was 100% told it’s not super reliable, and I need to have a really close eye on it,” said the former employee.

EvenUp told B-17 that it uses a combination of humans and AI and that this should be viewed as a feature, not a bug.

“The combined approach ensures maximum accuracy and the highest quality,” EvenUp cofounder and CEO Rami Karabibar said in a statement. “Some demands are generated and finalized using mostly AI, with a small amount of human input needed, while other more complicated demands require extensive human input but time is still saved by using the AI.”

AI’s virtuous cycle of improvement

It’s a common strategy for AI companies to rely on humans early on to complete tasks and refine approaches. Over time, these human inputs are fed into AI models and related systems, and the technology is meant to learn and improve. At EvenUp, signs of this virtuous AI cycle have been thin on the ground, the former employees said.

“It didn’t seem to me like the AI was improving,” said one former staffer.

“Our AI is improving every day,” Karabibar said. “It saves more time today than it did a week ago, it saved more time a week ago than it did a month ago, and it saved a lot more time a month ago than it did last year.”

A broader concern

EvenUp’s situation highlights a broader concern as AI sweeps across markets and boardrooms and into workplaces and consumers’ lives. Success in generative AI requires complex new technology to continue to improve. Sometimes, there’s a gap between the dreams of startup founders and investors and the practical reality of this technology when used by employees and customers. Even Microsoft has struggled with some practical implementations of its marquee AI product, Copilot.

While AI is adept at sorting and interpreting vast amounts of data, it has so far struggled to accurately decipher content such as medical records that are formatted differently and often feature doctors’ handwriting scribbled in the margins, said Abdi Aidid, an assistant professor of law at the University of Toronto who has built machine-learning tools.

“When you scan the data, it gets scrambled a lot, and having AI read the scrambled data is not helpful,” Aidid said.

Earlier this year, B-17 asked EvenUp about the role of humans in producing demand letters, one of its key products. After the outreach, the startup responded with written answers and published a blog post that clarified the roles employees play.

“While AI models trained on generic data can handle some tasks, the complexity of drafting high-quality demand letters requires much more than automation alone,” the company wrote. “At EvenUp, we combine AI with expert human review to deliver unmatched precision and care.”

The startup’s spokesman declined to specify how much time its AI saves but told B-17 that employees spend 20% less time writing demand letters than they did at the beginning of the year. The spokesman also said 72% of demand letter content is started from an AI draft, up from 63% in June 2023.

A father’s injury

EvenUp was founded in 2019, more than two years before OpenAI’s ChatGPT launched the generative AI boom.

Karabibar, Raymond Mieszaniec, and Saam Mashhad started EvenUp to “even” the playing field for personal-injury victims. Founders and investors often cite the story of Mieszaniec’s father, Ray, to explain why their mission is important. He was disabled after being hit by a car, but his lawyer didn’t know the appropriate compensation, and the resulting settlement “was insufficient,” Lightspeed Venture Partners, one of EvenUp’s investors, said in a write-up about the company.

“We’ve trained a machine to be able to read through medical records, interpret the information it’s scanning through, and extract the critical pieces of information,” Mieszaniec said in an interview last year. “We are the first technology that has ever been created to essentially automate this entire process and also keep the quality high while ensuring these firms get all this work in a cost-effective manner.”

EvenUp technical errors

The eight former EvenUp employees told B-17 earlier this year that this process has been far from automated and has been prone to errors.

“You have to pretty much double-check everything the AI gives you or do it completely from scratch,” said one former employee.

For instance, the software has missed key injuries in medical records while hallucinating conditions that did not exist, according to some of the former employees. B-17 found no instances of these errors making it into the final product. Such mistakes, if not caught by human staff, could have reduced payouts, three of the employees said.

EvenUp’s system sometimes recorded multiple hospital visits over several days as just one visit. If employees had not caught the mistakes, the claim could have been lower, one of the former staffers said.

The former employees recalled EvenUp’s AI system hallucinating doctor visits that didn’t happen. It has also reported that a victim suffered a shoulder injury when, in fact, their leg was hurt. The system has also mixed up which direction a car was traveling, which is important information in personal-injury lawsuits, the former employees said.

“It would pull information that didn’t exist,” one former employee recalled.

The software has also sometimes left out key details, such as whether a doctor determined a patient’s injury was caused by a particular accident — crucial information for assigning damages, according to some of the employees.

“That was a big moneymaker for the attorneys, and the AI would miss that all the time,” one former employee said.

EvenUp’s spokesman acknowledged that these problems cited by former employees “could have happened,” especially in earlier versions of its AI, but said this is why it employs humans as a backstop.

A customer and an investor

EvenUp did not make executives available for interviews, but the spokesman put B-17 in touch with a customer and an investor.

Robert Simon, the cofounder of the Simon Law Group, said EvenUp’s AI has made his personal-injury firm more efficient and that using humans reduces errors.

“I appreciate that because I would love to have an extra set of eyes on it before the product comes back to me,” Simon said. “EvenUp is highly, highly accurate.”

Sarah Hinkfuss, a partner at Bain Capital Ventures, said she appreciated EvenUp’s human workers because they help train AI models that can’t easily be replicated by competitors like OpenAI and its ChatGPT product.

“They’re building novel datasets that did not exist before, and they are automating processes that significantly boost gross margins,” Hinkfuss wrote in a blog post earlier this year.

Long hours, less automation

Most of the former EvenUp employees said a major reason they were drawn to the startup was that they had the impression AI would be doing much of the work.

“I thought this job was going to be really easy,” said one of the former staffers. “I thought that it was going to be like you check work that the AI has already done for you.”

The reality, these people said, was that they had to work long hours to spot, correct, and complete tasks that the AI system could not handle with full accuracy.

“A lot of my coworkers would work until 3 a.m. and on weekends to try to keep up with what was expected,” another former employee recalled.

EvenUp’s AI could be helpful in simple cases that could be completed in as little as two hours. But more complex cases sometimes required eight hours, so a workday could stretch to 16 hours, four of the former employees said.

“I had to work on Christmas and Thanksgiving,” said one of these people. “They [the managers] acted like it should be really quick because the AI did everything. But it didn’t.”

EvenUp’s spokesman said candidates are told upfront the job is challenging and requires a substantial amount of writing. He said retention rates are “in line with other hyper-growth startups” and that 40% of legal operations associates were promoted in the third quarter of this year.

“We recognize that working at a company scaling this fast is not for everyone,” said the spokesman. “In addition, as our AI continues to improve, leveraging our technology will become easier and easier.”

Highlighting the continued importance of human workers, the spokesman noted that EvenUp hired a vice president of people at the end of October.