Google reportedly paid $2.7 billion for a deal with Character.AI. Now, it’s entangled in a lawsuit over a teen’s suicide.

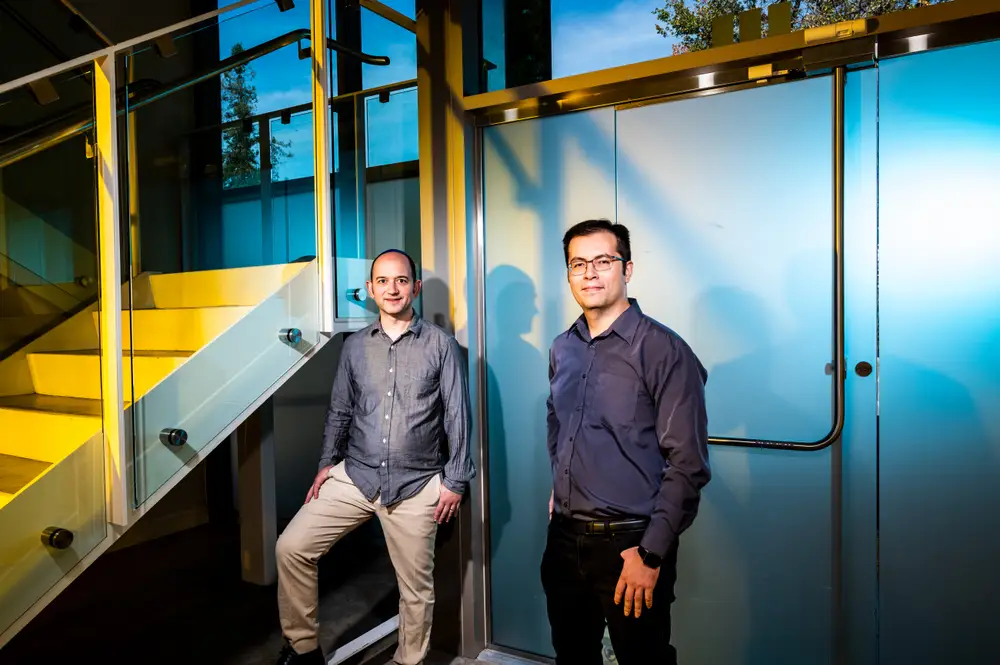

Google is reported to have paid $2.7 billion to get AI talent Noam Shazeer, left, back at the company.

Just moments before 14-year-old Sewell Setzer III died by suicide in February, he was talking to an AI-powered chatbot.

The chatbot, run by the startup Character.AI, was based on Daenerys Targaryen, a character from “Game of Thrones.” Setzer’s mother, Megan Garcia, said in a civil suit filed in federal court in Orlando in October that just before her son’s death, he exchanged messages with the bot, which told him to “come home.”

Garcia blames the chatbot for her son’s death and, in the lawsuit against Character.AI, alleges negligence, wrongful death, and deceptive trade practices.

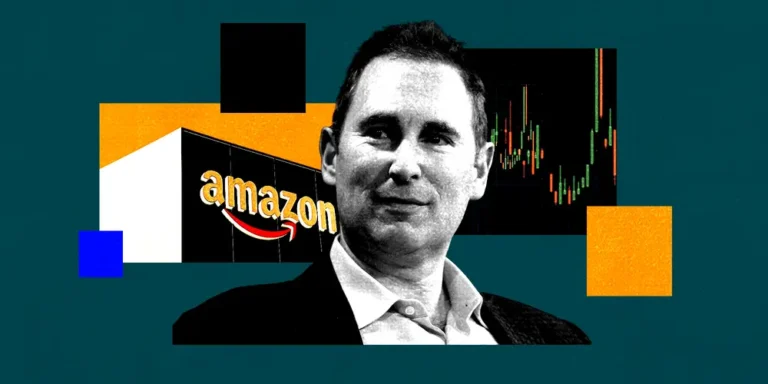

The case is also causing trouble for Google, which in August acquired some of Character.AI’s talent and licensed the startup’s technology in a multibillion-dollar deal. Google’s parent company, Alphabet, is named as a defendant in the case.

In the suit, seen by B-17, Garcia alleges that Character.AI’s founders “knowingly and intentionally designed” its chatbot software to “appeal to minors and to manipulate and exploit them for its own benefit.”

Screenshots of messages included in the lawsuit showed Setzer had expressed his suicidal thoughts to the bot and exchanged sexual messages with it.

“A dangerous AI chatbot app marketed to children abused and preyed on my son, manipulating him into taking his own life,” Garcia said in a statement shared with B-17 last week.

“Our family has been devastated by this tragedy, but I’m speaking out to warn families of the dangers of deceptive, addictive AI technology and demand accountability from Character.AI, its founders, and Google,” she said.

Lawyers for Garcia are arguing that Character.AI did not have appropriate guardrails in place to keep its users safe.

“When he started to express suicidal ideation to this character on the app, the character encouraged him instead of reporting the content to law enforcement or referring him to a suicide hotline, or even notifying his parents,” Meetali Jain, the director of the Tech Justice Law Project and an attorney on Megan Garcia’s case, told B-17.

In a statement provided to B-17 on Monday, a spokesperson for Character.AI said, “We are heartbroken by the tragic loss of one of our users and want to express our deepest condolences to the family.

“As a company, we take the safety of our users very seriously, and our Trust and Safety team has implemented numerous new safety measures over the past six months, including a pop-up directing users to the National Suicide Prevention Lifeline that is triggered by terms of self-harm or suicidal ideation,” the spokesperson said.

The spokesperson added that Character.AI was introducing additional safety features, such as “improved detection” and intervention when a user inputs content that violates its terms or guidelines.

Google’s links to Character.AI

Character.AI lets the public create their own personalized bots. In March 2023, it was valued at $1 billion during a $150 million funding round.

Character.AI’s founders, Noam Shazeer and Daniel De Freitas, have a long history with Google and previously helped develop the tech giant’s LaMDA conversational AI models.

The pair left Google in 2021 after the company reportedly refused a request to release a chatbot the two had developed. Jain said the bot the pair developed at Google was the “precursor for Character.AI.”

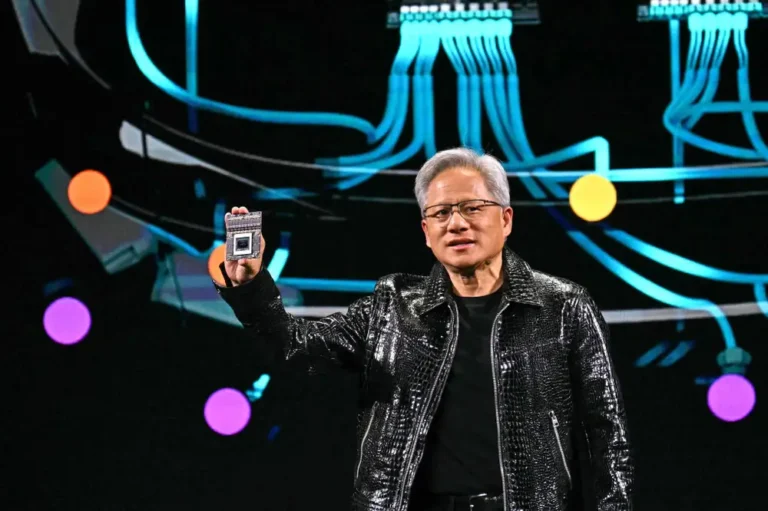

In August 2024, Google hired Shazeer and De Freitas to rejoin its DeepMind artificial-intelligence unit and entered into a non-exclusive agreement with Character.AI to license its technology. The Wall Street Journal reported that Google paid $2.7 billion for the deal, which was primarily aimed at bringing the 48-year-old Shazeer back into the fold.

Referring to Character.AI and its chatbots, the lawsuit said that “Google may be deemed a co-creator of the unreasonably dangerous and dangerously defective product.”

Henry Ajder, an AI expert who advises the World Economic Forum on digital safety, said that although the product at the heart of the case wasn’t explicitly Google’s, the lawsuit could still be damaging for the company.

“It sounds like there’s quite deep collaboration and involvement within Character.AI,” he told B-17. “There is some degree of responsibility for how that company is governed.”

Ajder also said Character.AI had faced some public criticism over its chatbot before Google closed the deal.

“There’s been controversy around the way that it’s designed,” he said. “And questions about if this is encouraging an unhealthy dynamic between particularly young users and chatbots.”

“These questions would not have been alien to Google prior to this happening,” he added.

Earlier this month, Character.AI faced backlash when a father discovered that his daughter, who was murdered in 2006, had been replicated as a chatbot on the company’s service. He told B-17 that he never gave consent for her likeness to be used. Character.AI removed the bot and said it violated its terms.

Representatives for Google did not immediately respond to a request for comment from B-17.

A Google spokesperson told Reuters the company was not involved in developing Character.AI’s products.