Google Search is about to see ‘a pretty dramatic shift’

Google CEO Sundar Pichai speaks at Google I/O

While Google’s AI Search Overviews may have gotten off to a rocky start, the company is making no bones about its belief that generative AI is the future of its core product.

The company just announced it will roll out a mishmash of new features that take Google Search even further from its traditional page of blue links. It will tap its Gemini AI models to change both how results appear and how users ask Google queries.

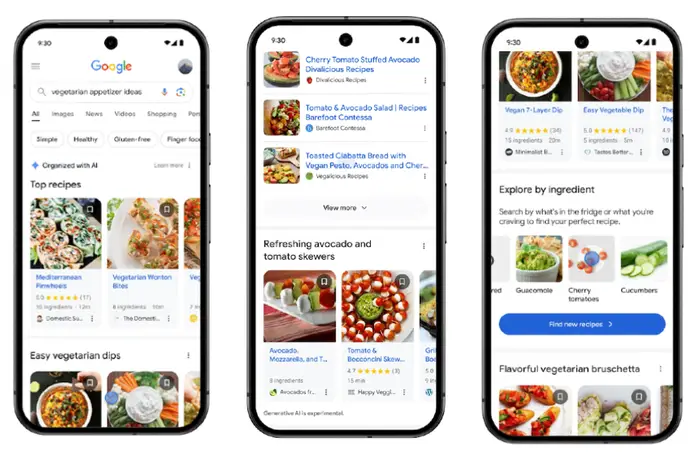

The most dramatic example is an entirely new Search experience that uses AI to organize the page’s layout, grouping results into different categories and pushing videos, forum links (think Reddit and Quora), and other widgets to the top of the page.

The result looks miles away from a single list of web pages.

“From a user perspective, this is a pretty dramatic shift from where we were before,” said Rhiannon Bell, vice president of user experience for Google Search, in a roundtable with reporters.

For now at least, Google sees this new search page as useful only for more open-ended queries that AI Overviews can’t answer directly. That’s why, to begin with, the page will only activate when users search for recipe ideas, although Google plans to roll it out to other topics over time.

The new search page will be available only on mobile for now, although there’s every reason to expect this to be the standard experience on phones and desktops across Search in the future.

“The main thing to know is that really we see this experience being amazing for exploration and inspiration,” said Bell. “And so it’s really where there’s no right answer.”

Google’s new AI-organized search results shake up the traditional layout.

Bell told B-17 that the new page will be personalized based on location to start, and that Google plans to tweak the dials and hone results based on other data points (it’s unclear which).

“We can start categorizing this content for you in ways that we previously could not have,” Bell said.

Of course, the new layout raises questions about how Google Search rankings, governed by an ever-changing, ever-elusive algorithm, will work with these new formats. SEOs and publishers have been scrambling to make sense of Google’s AI Overviews, and these further changes will raise more questions about how websites get visibility in the generative AI era.

It’s a difficult balancing act, but some good news for publishers: Google says it is introducing hyperlinks into the AI Overview results. Until now, AI answers only included links to the sources underneath, but now users will see links in the text that go directly to the source of the information. Google says early testing has shown increased traffic to the linked websites, although it declined to provide specific figures.

Google will also add ads to the AI Search Overviews. The ads will appear under answers where Google deems them “relevant to both the query and the answer provided.”

Using Google Lens to search

Google is upgrading Lens, a feature that uses the phone camera to search for information about what it sees.

Users can take a video and then ask Lens questions about the footage. For example, they might shoot a video of a bird and ask Lens to identify it, and Lens will spit out an AI-generated answer. Users can also simply point the camera at something and ask Lens a question using their voice in real time.

The additions seem like another step toward Google’s Project Astra demo from earlier this year, where the user in the video pointed their phone at various objects in a room and asked questions. The user was interacting with a chatbot built on Google’s Gemini, although the fundamental idea was the same: letting Google see and understand the world around you.

In addition, Google is bolstering its shopping features on Lens. Rather than just showing similar items when users point Lens at an object, it will now give more information when they search for an item, such as reviews and price comparisons across retailers.

“Today, 20% of all Google Lens searches are actually shopping-related, which is pretty interesting,” said Lens product director Lou Wang.