In defense of that ‘Goodbye Meta AI’ post that’s all over Instagram

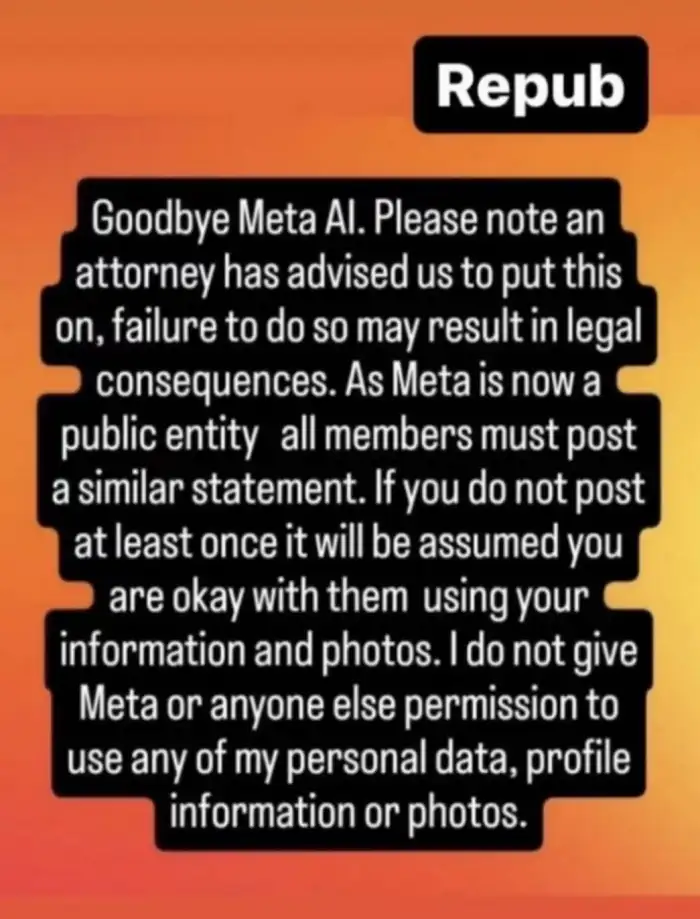

Can the “Goodbye Meta AI” copypasta that’s been floating around Instagram Stories — even getting posted by celebrities like Tom Brady, Cat Power, and James McAvoy — actually stop Meta from using people’s data to help train AI?

No, Meta says: The statements aren’t legally binding. (They’re gibberish, full of language that barely passes for legalese, and that should have been obvious to celebrities who have presumably seen and signed various contracts during their lifetimes. But that’s just my two cents!)

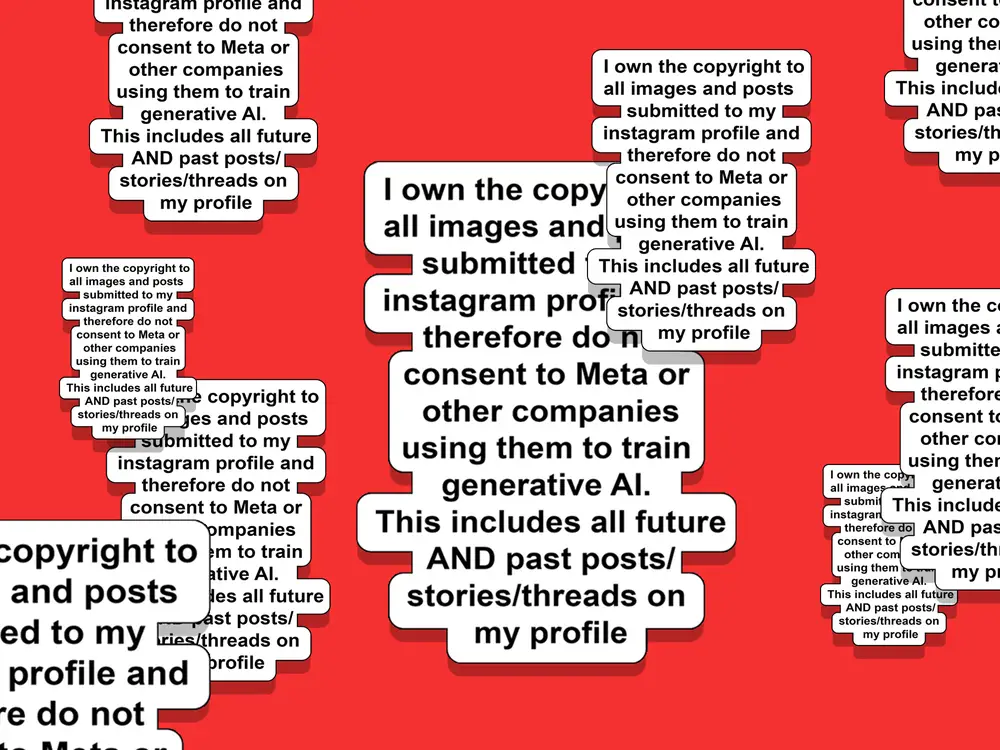

The “Goodbye Meta AI” copypasta that’s been floating around Instagram. It won’t change anything, Meta says.

Still, just because this goofy “copypasta” apparently has no bearing on Meta’s standing with its users doesn’t mean Meta shouldn’t take it seriously.

People are sharing it because they’re worried about AI and what it means for them. They think it’s some invasive thing that’s being done to them, taking away their content without their permission.

And this isn’t some paranoid delusion. It’s actually happening! Meta is indeed using public Facebook posts and photos going back as far as 2007 to train its AI models. Outside the EU, where regulations require an opt-out, the only way to keep your posts out is to set them to private.

This copypasta going around Instagram probably seems familiar. Versions of it have bubbled up every few years on Facebook: some copy/pasted text that claims to inoculate the user who posts it against some rapacious action by the tech overlords who run the place.

B-17 debunked these types of posts in 2015 — twice, and Facebook’s PR department even posted about them back in 2012. And you can find news reports discrediting posts like this when they surged again in 2016 and 2019.

Yes, these are all silly, poorly written, and clearly not legal documents. But they have always spoken to the power imbalance that some users feel on Facebook and Instagram: We love using these products, and we love using them for free, but we kind of feel like we’re being exploited somehow, whether or not that’s a fair assessment.

Facebook has had large and serious issues with user privacy — it’s no wonder some users are skeptical about it! People’s willingness to fall for these copypastas isn’t a sign that people are rubes; it’s a sign of how Facebook has sometimes failed to earn people’s trust over the years.

In an interview on the eve of the Meta Connect event this week, The Verge’s Alex Heath directly asked Mark Zuckerberg if he understood why people feel weird about AI. The Meta CEO didn’t exactly answer:

Heath: But do you understand the concern people and creators have about training data and how it’s used — this idea that their data is being used for these models but they’re not getting compensated and the models are creating a lot of value? I know you’re giving away Llama, but you’ve got Meta AI. I understand the frustration that people have. I think it’s a naturally bad feeling to be like, “Oh, my data is now being used in a new way that I have no control or compensation over.” Do you sympathize with that?

Zuckerberg: Yeah. I think that in any new medium in technology, there are the concepts around fair use and where the boundary is between what you have control over. When you put something out in the world, to what degree do you still get to control it and own it and license it? I think that all these things are basically going to need to get relitigated and rediscussed in the AI era. I get it. These are important questions. I think this is not a completely novel thing to AI, in the grand scheme of things. There were questions about it with the internet overall, too, and with different technologies over time. But getting to clarity on that is going to be important, so that way, the things that society wants people to build, they can go build.

When it comes to using people’s Facebook or Instagram posts to train AI, people could have reason to feel uncomfortable. Meta should listen to this outpouring of some of its users’ sentiments: They feel weird and bad about their stuff being used to train AI.