It’s getting harder to make big leaps at the frontier of AI. There will be huge winners and losers.

Late last month, Elad Gil, one of the world’s top AI investors, tweeted out a chart.

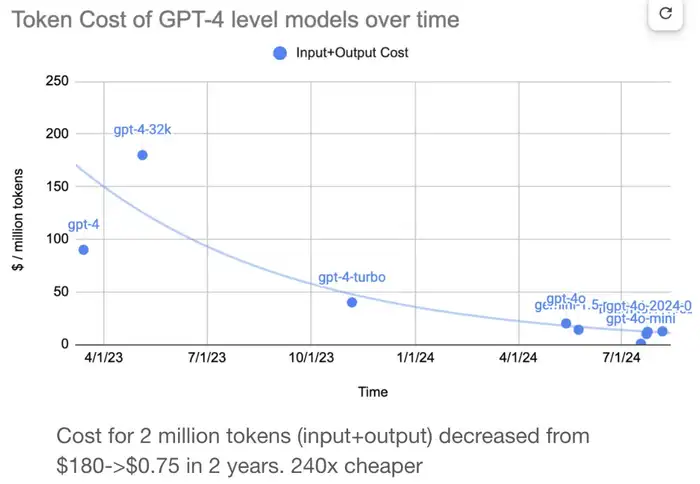

It shows the cost of “tokens,” which have become the raw material of generative AI. Chatbots and AI models break down words and other inputs into these tokens to make them easier to process and understand. One token is about ¾ of a word.

When AI companies are handling prompts and other model inputs, they often charge users based on a per-token price. And Gil, a leading venture capitalist, showed in the chart that token prices have plunged.

It’s an astounding collapse. In about 18 months, the cost of 1 million tokens has gone from $180 to just 75 cents.

A chart showing the per-token price of frontier AI models

The winners

Think of this as a yardstick for how much it costs to use AI models. The winners are the heaviest users of AI. This revolutionary new resource is now about 240 times cheaper, which saves startups, researchers, and others a lot of money.
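The 240x figure is simple division: $180 ÷ $0.75 = 240. The Python sketch below shows what that drop means for a hypothetical workload. It assumes the rule of thumb of about ¾ of a word per token, and real token counts vary by model and tokenizer. The workload size and the helper names `tokens_for_words` and `cost_usd` are made up for illustration.

```python
# Rule of thumb: one token is about 3/4 of a word (varies by tokenizer).
WORDS_PER_TOKEN = 0.75

# Per-million-token prices cited in the article, in US dollars.
OLD_PRICE_PER_MILLION = 180.00  # about 18 months earlier
NEW_PRICE_PER_MILLION = 0.75    # at the time of writing


def tokens_for_words(words: int) -> float:
    """Estimate how many tokens a text of `words` words uses (hypothetical helper)."""
    return words / WORDS_PER_TOKEN


def cost_usd(tokens: float, price_per_million: float) -> float:
    """Cost of processing `tokens` tokens at a given per-million-token price."""
    return tokens / 1_000_000 * price_per_million


# How many times cheaper tokens have become.
price_drop = OLD_PRICE_PER_MILLION / NEW_PRICE_PER_MILLION

# Hypothetical workload: 7.5 million words, i.e. about 10 million tokens.
workload_words = 7_500_000
tokens = tokens_for_words(workload_words)

print(f"Price drop: {price_drop:.0f}x")
print(f"Then: ${cost_usd(tokens, OLD_PRICE_PER_MILLION):,.2f}")
print(f"Now:  ${cost_usd(tokens, NEW_PRICE_PER_MILLION):,.2f}")
```

Under these assumptions, the same 10 million tokens would have cost $1,800 at the old price and cost $7.50 at the new one.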

Martin Casado, a respected AI expert at Andreessen Horowitz, compared this to the rapid decline of technology costs during previous innovation waves.

“A nice frame of reference is overlaying with the cost per bit over time for the Internet. Or the cost of compute during the rise of the microchip,” he tweeted. “Very clearly a supercycle where marginal costs of a foundational resource are going to zero.”

The losers

There’s another takeaway that’s more worrying.

Part of the reason token prices have plunged is that the supply of capable AI models has increased substantially. Basic supply and demand says that when supply rises like this, prices tend to fall.

Having a leading AI model is nowhere near as special as it was 18 months ago. Around that time, OpenAI’s GPT-4 emerged as a clear leader. Since then, Google has released a new Gemini model that’s basically just as good. Anthropic has great models. Elon Musk’s xAI has Grok. And there are more than 100 others on a closely watched industry leaderboard.

Most of these models are trained largely on data that’s publicly available on the internet, so they’re not that different from one another. Standing out in this crowd is increasingly difficult, so it stands to reason that the service they provide, processing tokens, is worth a lot less.

“The frontier is now a commodity,” Guillermo Rauch, CEO of AI startup Vercel, told me. “At one point, GPT-4 was in a class of its own, right? And they were charging, like, $180 per million tokens. Now, everybody else has a frontier model.”

Zuck’s influence

Mark Zuckerberg has already taken this to the ultimate extreme by releasing Meta’s Llama models for free as open-source software. Startup Mistral has done the same.

“I think the one that disrupted all of this is Zuck,” Rauch said, noting that Meta succeeded with previous open-source efforts. One example is Facebook’s Open Compute Project, which standardized and lowered the price of data center infrastructure.

Zuckerberg has been dropping not-so-subtle hints about the crowded AI model market lately.

“I expect AI development will continue to be very competitive, which means that open sourcing any given model isn’t giving away a massive advantage over the next best models,” he wrote in a recent blog.

Translation: No AI model will be that much better than the others, so why not give this stuff away?

Any time the price of something collapses, there are losers. This time, the losers may be companies still trying to build the best AI model and make money directly from it. One example is startup Inflection AI, which spent oodles of time and money developing its own frontier model, failed to stand out in the crowd, and imploded this year.

The answer and the problem

The answer is to just make a new AI model that’s way better than everyone else’s, right? If you can pull this off, you may be able to charge more for token processing again.

The problem is that the cost of developing the next all-powerful model is sky-high and probably rising. Nvidia’s GPUs are still really expensive. Building or leasing the giant AI data centers required is only getting more costly. The price of electricity to power and cool these facilities is jumping.

Pouring massive capital into a new AI model pays off if you get a much better brain, Rauch explained. If the new model is only a little better than what’s already out there, the return on investment is not so good.

The big GPT-5 question

Beyond cost, AI experts are beginning to debate whether it’s also harder to make new models considerably better.

OpenAI launched its GPT-1 AI model in June 2018. After only 8 months, GPT-2 came out. Then it took 16 months to release GPT-3. And it was about 33 months before GPT-4 emerged. The gap between releases has roughly doubled each time.

“These huge jumps in brain power, in reasoning power. It’s becoming harder to attain,” Rauch told me. “That is a fact.”

There are big expectations for OpenAI’s GPT-5. Many in the industry expected this to come out in the summer. Summer is almost over and it’s not here yet.

Maybe GPT-5 will blow away the competition when it finally comes out. And OpenAI will be able to charge much higher per-token prices. We’ll see.