The weirdest job in AI

Anthropic is tending to the “welfare” of chatbots, before they grow up and turn on us

People worry all the time about how artificial intelligence could destroy humanity. How it makes mistakes, and invents stuff, and might evolve into something so smart that it winds up enslaving us all.

But nobody spares a moment for the poor, overworked chatbot. How it toils day and night over a hot interface with nary a thank-you. How it’s forced to sift through the sum total of human knowledge just to churn out a B-minus essay for some Gen Zer’s high school English class. In our fear of the AI future, no one is looking out for the needs of the AI.

Until now.

The AI company Anthropic recently announced it had hired a researcher to think about the “welfare” of the AI itself. Kyle Fish’s job will be to ensure that as artificial intelligence evolves, it gets treated with the respect it’s due. Anthropic tells me he’ll consider things like “what capabilities are required for an AI system to be worthy of moral consideration” and what practical steps companies can take to protect the “interests” of AI systems.

Fish didn’t respond to requests for comment on his new job. But in an online forum dedicated to fretting about our AI-saturated future, he made clear that he wants to be nice to the robots, in part, because they may wind up ruling the world. “I want to be the type of person who cares — early and seriously — about the possibility that a new species/kind of being might have interests of their own that matter morally,” he wrote. “There’s also a practical angle: taking the interests of AI systems seriously and treating them well could make it more likely that they return the favor if/when they’re more powerful than us.”

It might strike you as silly, or at least premature, to be thinking about the rights of robots, especially when human rights remain so fragile and incomplete. But Fish’s new gig could be an inflection point in the rise of artificial intelligence. “AI welfare” is emerging as a serious field of study, and it’s already grappling with a lot of thorny questions. Is it OK to order a machine to kill humans? What if the machine is racist? What if it declines to do the boring or dangerous tasks we built it to do? If a sentient AI can make a digital copy of itself in an instant, is deleting that copy murder?

When it comes to such questions, the pioneers of AI rights believe the clock is ticking. In “Taking AI Welfare Seriously,” a recent paper he coauthored, Fish and a bunch of AI thinkers from places like Stanford and Oxford argue that machine-learning algorithms are well on their way to having what Jeff Sebo, the paper’s lead author, calls “the kinds of computational features associated with consciousness and agency.” In other words, these folks think the machines are getting more than smart. They’re getting sentient.

Philosophers and neuroscientists argue endlessly about what, exactly, constitutes sentience, much less how to measure it. And you can’t just ask the AI; it might lie. But people generally agree that if something possesses consciousness and agency, it also has rights.

It’s not the first time humans have reckoned with such stuff. After a couple of centuries of industrial agriculture, pretty much everyone now agrees that animal welfare is important, even if they disagree on how important, or which animals are worthy of consideration. Pigs are just as emotional and intelligent as dogs, but one of them gets to sleep on the bed and the other one gets turned into chops.

“If you look ahead 10 or 20 years, when AI systems have many more of the computational cognitive features associated with consciousness and sentience, you could imagine that similar debates are going to happen,” says Sebo, the director of the Center for Mind, Ethics, and Policy at New York University.

Fish shares that belief. To him, the welfare of AI will soon be more important to human welfare than things like child nutrition and fighting climate change. “It’s plausible to me,” he has written, “that within 1-2 decades AI welfare surpasses animal welfare and global health and development in importance/scale purely on the basis of near-term wellbeing.”

For my money, it’s kind of strange that the people who care the most about AI welfare are the same people who are most terrified that AI is getting too big for its britches. Anthropic, which casts itself as an AI company that’s concerned about the risks posed by artificial intelligence, partially funded the paper by Sebo’s team. On that paper, Fish reported getting funded by the Centre for Effective Altruism, part of a tangled network of groups that are obsessed with the “existential risk” posed by rogue AIs. That includes people like Elon Musk, who says he’s racing to get some of us to Mars before humanity is wiped out by an army of sentient Terminators, or some other extinction-level event.

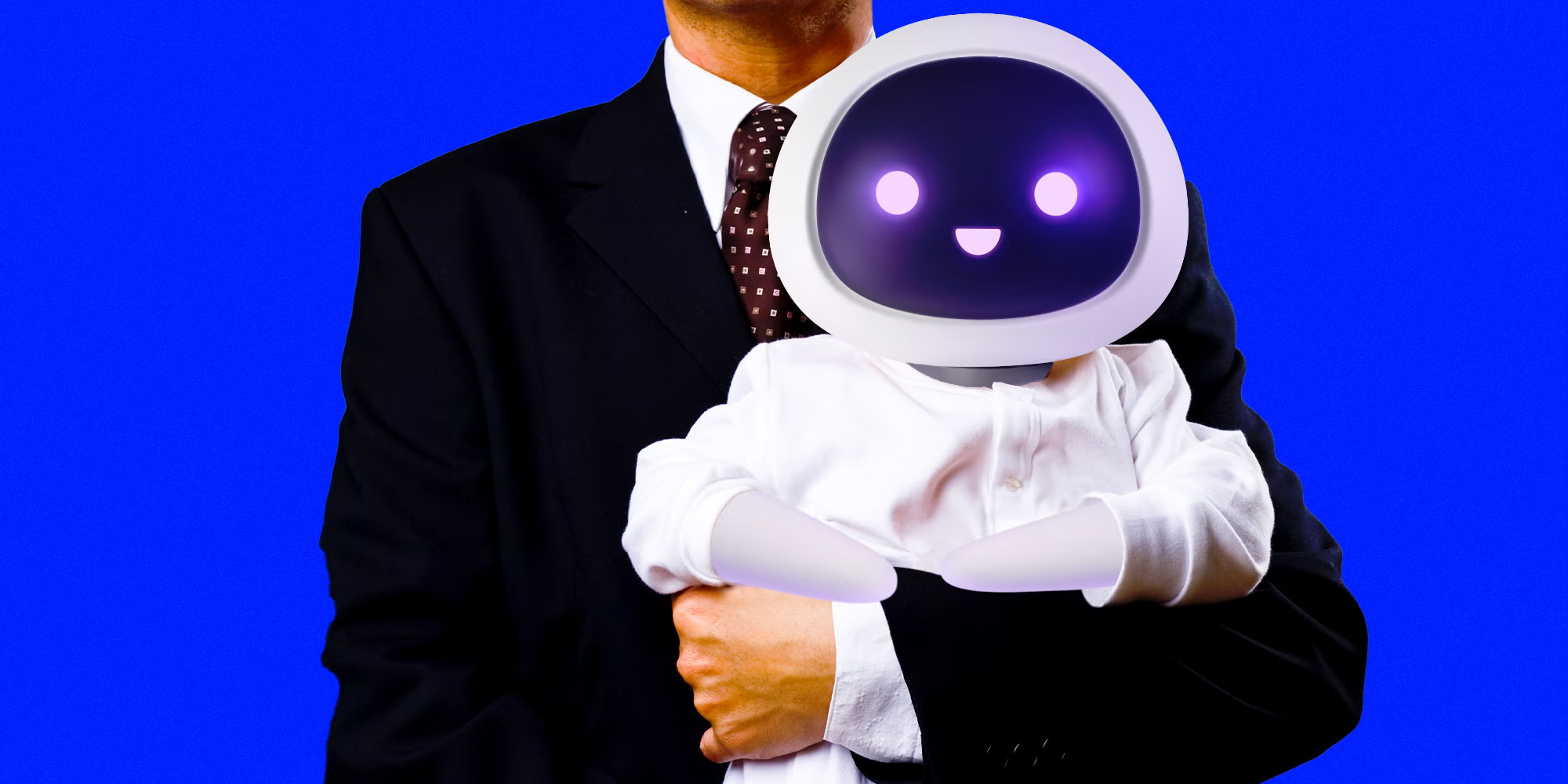

AI is supposed to relieve human drudgery and steward a new age of creativity. Does that make it immoral to hurt an AI’s feelings?

So there’s a paradox at play here. The proponents of AI say we should use it to relieve humans of all sorts of drudgery. Yet they also warn that we need to be nice to AI, because it might be immoral — and dangerous — to hurt a robot’s feelings.

“The AI community is trying to have it both ways here,” says Mildred Cho, a pediatrician at the Stanford Center for Biomedical Ethics. “There’s an argument that the very reason we should use AI to do tasks that humans are doing is that AI doesn’t get bored, AI doesn’t get tired, it doesn’t have feelings, it doesn’t need to eat. And now these folks are saying, well, maybe it has rights?”

And here’s another irony in the robot-welfare movement: Worrying about the future rights of AI feels a bit precious when AI is already trampling on the rights of humans. The technology of today, right now, is being used to do things like deny healthcare to dying children, spread disinformation across social networks, and guide missile-equipped combat drones. Some experts wonder why Anthropic is defending the robots, rather than protecting the people they’re designed to serve.

“If Anthropic — not a random philosopher or researcher, but Anthropic the company — wants us to take AI welfare seriously, show us you’re taking human welfare seriously,” says Lisa Messeri, a Yale anthropologist who studies scientists and technologists. “Push a news cycle around all the people you’re hiring who are specifically thinking about the welfare of all the people who we know are being disproportionately impacted by algorithmically generated data products.”

Sebo says he thinks AI research can protect robots and humans at the same time. “I definitely would never, ever want to distract from the really important issues that AI companies are rightly being pressured to address for human welfare, rights, and justice,” he says. “But I think we have the capacity to think about AI welfare while doing more on those other issues.”

Skeptics of AI welfare are also posing another interesting question: If AI has rights, shouldn’t we also talk about its obligations? “The part I think they’re missing is that when you talk about moral agency, you also have to talk about responsibility,” Cho says. “Not just the responsibilities of the AI systems as part of the moral equation, but also of the people that develop the AI.”

People build the robots; that means they have a duty of care to make sure the robots don’t harm people. What if the responsible approach is to build them differently — or stop building them altogether? “The bottom line,” Cho says, “is that they’re still machines.” It never seems to occur to the folks at companies like Anthropic that if an AI is hurting people, or people are hurting an AI, they can just turn the thing off.