This chart shows one potential advantage AWS’s AI chips have over Microsoft and Google

Big tech cloud providers have been investing in their own homegrown AI chips lately, in hopes of replicating Nvidia’s success.

Amazon Web Services has worked on its Trainium and Inferentia processors for several years, while Google unveiled its sixth-generation TPU line of AI chips this year. Microsoft also introduced its first in-house AI accelerator, Maia, in 2023.

It may still be too early to tell which company is doing better, given how little financial detail each company discloses and Nvidia’s continued dominance with its GPUs.

But according to Gil Luria, a tech analyst at D.A. Davidson, AWS’s AI chips may have one potential advantage: more regional availability.

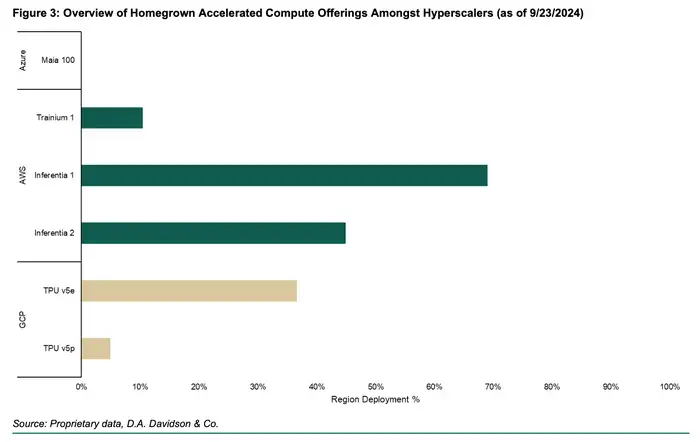

AWS’s Inferentia, which is designed for inference (the stage in which trained AI models generate outputs), is more widely available than Google’s competing v5e TPU chips, according to data Luria shared in a research note this week.

AWS’s AI training chip, Trainium, also had a higher rate of regional availability than its TPU counterpart. Microsoft’s Maia has no external deployment because it currently serves only OpenAI-related workloads, Luria wrote.

Regional deployment rate of AI chips by Microsoft, Amazon, and Google

Making these chips available in more regions is important because it gives customers a wider range of options, Luria told B-17. Not every customer needs Nvidia’s GPUs, which can be significantly more expensive than other processors, he said.

“The high level of availability of home-grown chips at AWS data centers means they can give their customers choice,” Luria said. “Customers with very demanding training needs may choose to use a cluster of Nvidia chips at the AWS data center, and customers with more straightforward needs can use Amazon chips at a fraction of the cost.”

AWS spokesperson Patrick Neighorn told B-17 in an email, “We strive to provide customers the choice of compute that best meets the needs of their workload, and we’re encouraged by the progress we’re making with AWS silicon.”

Spokespeople for Google and Microsoft declined to comment.

Any kind of edge would matter for AWS. The company has doubled down on its silicon business lately, but its AI chips have so far had mixed results against Nvidia’s dominance. It’s still early days, though.

The data has some limitations. For example, AWS’s first Inferentia offering launched in 2018, while Google’s latest TPU v5e and v5p chips became available only within the past year, so AWS’s chip has had more time to reach its current level of deployment. Some would also argue that broad regional deployment is not necessarily a sign of effectiveness, since some customers prefer to have chip capacity concentrated in a single region.

In his note, Luria also described Google’s TPU v5e as the “most mature accelerator offering” among the three largest cloud providers.

Still, the broader takeaway, Luria noted, is that AWS and Google Cloud have “much more mature homegrown silicon” than Microsoft, judging by their deeper regional penetration rates.

Microsoft is “at a disadvantage to both Amazon and Google” in this space because of Maia’s limited availability, he added.